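We integrated a YOLOv5 detection model ourselves, and when we measure accuracy on the board the loss is quite large: accuracy drops by at least 10 points. What could be causing this? Our parameter configuration is below.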

1. In the mapper directory

The YAML configuration is as follows:

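```yaml
# Copyright (c) 2020 Horizon Robotics.All Rights Reserved.
#
# The material in this file is confidential and contains trade secrets
# of Horizon Robotics Inc. This is proprietary information owned by
# Horizon Robotics Inc. No part of this work may be disclosed,
# reproduced, copied, transmitted, or used in any way for any purpose,
# without the express written permission of Horizon Robotics Inc.

# Model conversion parameters
model_parameters:
  # Floating-point ONNX model file
  onnx_model: './yolov5s-visible-lwir.onnx'
  # Whether to dump intermediate results during conversion; True dumps the output of every layer
  layer_out_dump: False
  # Layout of the on-board model output: NHWC or NCHW; None keeps the model's default layout
  output_layout: NHWC
  # Log level:
  #   debug: detailed conversion information
  #   info:  key information only
  #   warn:  warnings and errors only
  log_level: 'debug'
  # Directory for the conversion results
  working_dir: 'model_output'
  # File-name prefix of the model that runs on the board
  output_model_file_prefix: 'yolov5_mutil_modal_back'

# Model input parameters. With multiple input nodes, separate the values with ';'. Write None to use the defaults.
input_parameters:
  # (Optional) Input node names. They must match the names in the model file, otherwise an error is raised.
  # If omitted, the node names from the model file are used.
  input_name: 'img;lwir'
  # Data format fed to the network at runtime: nv12/rgbp/bgrp/yuv444_128/gray/featuremap.
  # If the runtime input is yuv444_128 and the model was trained on rgbp,
  # hb_mapper automatically inserts a YUV-to-RGBP (NCHW) conversion.
  input_type_rt: 'yuv444_128;yuv444_128'
  # Data format used during training: rgbp/bgrp/gray/featuremap/yuv444_128
  input_type_train: 'rgbp;rgbp'
  # Input preprocessing method:
  #   no_preprocess:       no operation
  #   mean_file:           subtract the per-channel mean read from the mean file
  #   data_scale:          multiply the pixels by the data_scale factor
  #   mean_file_and_scale: subtract the channel mean, then multiply by the scale factor
  norm_type: "data_scale;data_scale"
  # (Optional) Network input size, separated by 'x'; if omitted, the size from the model file is used
  input_shape: '1x3x672x672;1x3x672x672'
  # Mean values to subtract; per-channel means must be separated by spaces
  mean_value: 0 0 0;0 0 0
  # Preprocessing scale factor; must be a floating-point value
  scale_value: 0.003921568627451 0.003921568627451 0.003921568627451;0.003921568627451 0.003921568627451 0.003921568627451

calibration_parameters:
  # Directory of reference images for quantization. JPEG, BMP and similar formats are supported.
  # The images should come from typical scenes, usually 20~50 images selected from the test set,
  # and should cover typical scenes rather than unusual ones such as overexposed, saturated,
  # blurry, pure black or pure white images.
  # With multiple input nodes, separate the directories with ';'
  cal_data_dir: './calibration_data_rgbp_visible;./calibration_data_rgbp_lwir'
  # If the image size differs from the training size and preprocess_on is true,
  # the default preprocessing (OpenCV resize) scales or crops the images to the required size.
  # Otherwise, the images must be resized to the training size beforehand.
  preprocess_on: False
  # Calibration algorithm: kl or max. KL is usually sufficient.
  calibration_type: 'max'
  # With the promoter calibration method, mapper fine-tunes the model on the calibration data to improve accuracy.
  # promoter_level ranges from -1 to 2. Try 0 to 2 in order and stop once accuracy is sufficient.
  #   -1: no promoter
  #    0: slight adjustment, small accuracy gain
  #    1: larger adjustment than 0, larger accuracy gain
  #    2: aggressive adjustment; may improve accuracy significantly, but may also reduce it
  promoter_level: -1

# Compiler parameters
compiler_parameters:
  # Compilation strategy, bandwidth or latency:
  #   bandwidth: optimize DDR access bandwidth
  #   latency:   optimize inference time
  compile_mode: 'latency'
  # True enables compiler debug mode, which outputs performance simulation data such as frame rate and DDR bandwidth usage
  debug: False
  # Number of cores; a single-core model is compiled by default. Uncomment the line below for a dual-core model.
  # core_num: 2
  # Maximum execution time per function call, in microseconds
  # max_time_per_fc: 500
  # Optimization level, O0~O3:
  #   O0: no optimization, fastest compilation, least optimized
  #   O1-O3: higher levels are expected to produce a faster model but take longer to compile
  # O2 is recommended for the fastest verification
  optimize_level: 'O2'
```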
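To make the `norm_type`/`mean_value`/`scale_value` settings concrete, here is a minimal sketch of the normalization as the YAML comments describe it: subtract the per-channel mean, then multiply by the per-channel scale, with `;` separating the two inputs (`img;lwir`). This mirrors the comments only; it is not hb_mapper's actual implementation, and `parse_per_input` and `normalize` are hypothetical names. With mean 0 and scale 1/255, the model expects raw 0..255 pixel values.

```python
import numpy as np

# Values copied from the YAML above; ';' separates the two inputs (img;lwir).
MEAN_VALUE = "0 0 0;0 0 0"
SCALE_VALUE = ("0.003921568627451 0.003921568627451 0.003921568627451;"
               "0.003921568627451 0.003921568627451 0.003921568627451")


def parse_per_input(value):
    """Split a ';'-separated, space-separated value into one array per input."""
    return [np.array(part.split(), dtype=np.float64) for part in value.split(';')]


def normalize(chw, mean, scale):
    """(x - mean) * scale per channel on a CHW array.

    With mean 0 this reduces to x * scale, i.e. the data_scale case.
    """
    return (chw - mean[:, None, None]) * scale[:, None, None]


if __name__ == "__main__":
    means = parse_per_input(MEAN_VALUE)
    scales = parse_per_input(SCALE_VALUE)
    white = np.full((3, 2, 2), 255.0)  # a fully saturated 0..255 input
    print(len(scales), normalize(white, means[0], scales[0]).max())
```

Since 0.003921568627451 is 1/255 rounded, a pixel value of 255 maps to (almost exactly) 1.0.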

data_transformer.py:

```python
import sys
sys.path.append("../../../01_common/python/data/")
from transformer import *


def data_transformer(input_shape=(672, 672)):
    transformers = [
        PadResizeTransformer(input_shape),
        TransposeTransformer((2, 0, 1)),    # HWC -> CHW
        ChannelSwapTransformer((2, 1, 0)),  # reverse channel order (BGR -> RGB)
        # ScaleTransformer(1 / 255),        # disabled; the YAML sets scale_value to 1/255
    ]
    return transformers
```
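The calibration pipeline and the on-board pipeline in data_loader.py share the same first three steps. Here is a minimal NumPy sketch of what the Transpose and ChannelSwap steps produce. It assumes the calibration images are read with cv2.imread (BGR, HWC), as image_loader does for yolov5, and that ChannelSwapTransformer((2, 1, 0)) reorders axis 0 of the CHW array. PadResizeTransformer is omitted because its padding behavior is not shown in the post, and `calib_layout` is a hypothetical name.

```python
import numpy as np


def calib_layout(bgr_hwc):
    """HWC BGR -> CHW RGB, with values left in 0..255 (no 1/255 scaling).

    Assumed equivalent of TransposeTransformer((2, 0, 1)) followed by
    ChannelSwapTransformer((2, 1, 0)).
    """
    chw = np.transpose(bgr_hwc, (2, 0, 1))  # HWC -> CHW
    return chw[[2, 1, 0], ...]              # reverse channel order: BGR -> RGB


if __name__ == "__main__":
    img = np.zeros((4, 5, 3), dtype=np.float32)
    img[0, 0] = [10, 20, 30]  # B, G, R as cv2.imread stores them
    out = calib_layout(img)
    print(out.shape, out[:, 0, 0])  # (3, 4, 5) [30. 20. 10.]
```

Keeping the calibration data in 0..255 is consistent with the `data_scale` normalization in the YAML, assuming that normalization is part of the converted model.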

2. Excerpts from the tools/send_tools directory

data_loader.py:

```python
# Excerpt: yolov5 branch of the transformer selection
if algo_name == "yolov5":
    transformers = [
        PadResizeTransformer((672, 672)),
        TransposeTransformer((2, 0, 1)),
        ChannelSwapTransformer((2, 1, 0)),
        ColorConvertTransformer('RGB', 'YUV444'),
    ]
    return transformers, 672, 672
```

```python
def image_loader(image_path, algo_name, image_type):
    def image_load_func(x):
        return skimage.img_as_float(
            skimage.io.imread(x)).astype(np.float32)

    count = 0
    transformers, dst_h, dst_w \
        = get_transformers_by_algo_name(algo_name, image_type)
    '''
    if algo_name == "yolov5":
        transformers = [
            PadResizeTransformer((672, 672)),
            TransposeTransformer((2, 0, 1)),
            ChannelSwapTransformer((2, 1, 0)),
            ColorConvertTransformer('RGB', 'YUV444'),
        ]
        return transformers, 672, 672
    '''
    if algo_name in ("yolov2", "yolov3", "yolov5", "lenet_onnx", "lenet"):
        image_read_method = cv2.imread  # BGR, HWC, 0..255
    else:
        image_read_method = image_load_func
    image = image_read_method(image_path).astype(np.float32)
    org_height = image.shape[0]
    org_width = image.shape[1]
    if image.ndim != 3:
        # expand gray scale image to three channels
        image = image[..., np.newaxis]
        image = np.concatenate([image, image, image], axis=-1)
    image = [image]
    for tran in transformers:
        image = tran(image)
    # CHW -> HWC, flatten, and cast to uint8 (astype truncates toward zero)
    image = image[0].transpose((1, 2, 0)).reshape(-1).astype(np.uint8)
    return org_height, org_width, dst_h, dst_w, image
```
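The last two steps on the sending side are ColorConvertTransformer('RGB', 'YUV444') followed by `astype(np.uint8)`, which truncates rather than rounds. The sketch below isolates how much those two steps alone change the data, by converting to YUV444, packing the uint8 HWC payload as image_loader does, and converting back. It assumes full-range BT.601 (JPEG-style) coefficients; the formula actually used by ColorConvertTransformer is not shown in the post and may differ. All function names are hypothetical.

```python
import numpy as np

# Assumption: full-range BT.601 coefficients, U/V offset by 128.
_RGB2YUV = np.array([[0.299, 0.587, 0.114],
                     [-0.168736, -0.331264, 0.5],
                     [0.5, -0.418688, -0.081312]])
_OFFSET = np.array([0.0, 128.0, 128.0])


def rgb_to_yuv444(chw_rgb):
    """CHW float RGB (0..255) -> CHW float YUV444."""
    _, h, w = chw_rgb.shape
    yuv = _RGB2YUV @ chw_rgb.reshape(3, -1) + _OFFSET[:, None]
    return yuv.reshape(3, h, w)


def yuv444_to_rgb(chw_yuv):
    """Inverse of rgb_to_yuv444, used only to measure the round-trip error."""
    _, h, w = chw_yuv.shape
    flat = chw_yuv.reshape(3, -1) - _OFFSET[:, None]
    return (np.linalg.inv(_RGB2YUV) @ flat).reshape(3, h, w)


def to_payload(chw_yuv, round_to_nearest):
    """CHW -> HWC, flatten, cast to uint8, like the end of image_loader."""
    hwc = chw_yuv.transpose((1, 2, 0))
    if round_to_nearest:
        hwc = np.clip(np.rint(hwc), 0, 255)
    return hwc.reshape(-1).astype(np.uint8)  # plain astype truncates


def round_trip_error(chw_rgb, round_to_nearest):
    """Mean absolute RGB error after YUV444 conversion and uint8 packing."""
    h, w = chw_rgb.shape[1:]
    payload = to_payload(rgb_to_yuv444(chw_rgb), round_to_nearest)
    yuv_back = payload.reshape(h, w, 3).transpose((2, 0, 1)).astype(np.float64)
    return np.abs(yuv444_to_rgb(yuv_back) - chw_rgb).mean()


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rgb = rng.integers(0, 256, size=(3, 64, 64)).astype(np.float64)
    print("truncate:", round_trip_error(rgb, False))
    print("round:   ", round_trip_error(rgb, True))
```

Truncation adds a systematic bias of up to 1 LSB per YUV channel. Whether that is significant for this model would have to be measured; the sketch only shows how to isolate it.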

send_image.py:

```python
import google.protobuf
import zmq_msg_pb2
import zmq
import time
import sys
import random
import numpy as np
import ast
import os
import argparse
from data_loader import *


class ZmqSenderClient:
    def __init__(self, end_point):
        self.context = zmq.Context()
        self.socket = self.context.socket(zmq.PUSH)
        self.socket.setsockopt(zmq.SNDHWM, 2)
        self.socket.connect(end_point)

    def __del__(self):
        self.socket.close()
        self.context.destroy()

    def send_image_msg(self, img, image_name, image_type, org_h, org_w,
                       dst_h, dst_w):
        zmq_msg = zmq_msg_pb2.ZMQMsg()
        image_msg = zmq_msg.img_msg
        image_msg.image_data = img.tobytes()
        image_msg.image_width = org_w
        image_msg.image_height = org_h
        image_msg.image_name = image_name
        image_msg.image_format = image_type
        image_msg.image_dst_width = dst_w
        image_msg.image_dst_height = dst_h
        zmq_msg.msg_type = zmq_msg_pb2.ZMQMsg.IMAGE_MSG
        msg = zmq_msg.SerializeToString()
        # retry the non-blocking send until the message is queued
        while True:
            try:
                self.socket.send(msg, zmq.NOBLOCK)
                break
            except zmq.ZMQError:
                continue
        return 0

    def over(self):
        time.sleep(2)
        while True:
            try:
                zmq_msg = zmq_msg_pb2.ZMQMsg()
                zmq_msg.msg_type = zmq_msg_pb2.ZMQMsg.FINISH_MSG
                msg = zmq_msg.SerializeToString()
                self.socket.send(msg, zmq.NOBLOCK)
                break
            except zmq.ZMQError:
                continue


def send_images(ip, algo_name, input_file_path, is_input_preprocessed,
                image_count, image_type):
    end_point = "tcp://" + ip + ":6680"
    print("start to send data to %s ..." % end_point)
    zmq_sender = ZmqSenderClient(end_point)
    paths = os.listdir(input_file_path)  # list all entries in the folder
    if image_count is None:
        image_count = len(paths)
    elif image_count > len(paths):
        print('image count is too large')
        image_count = len(paths)
    # TODO random select images when image_count < len(paths)
    path_list = np.arange(image_count)
    current_count = 0
    for i in path_list:
        path = os.path.join(input_file_path, paths[i])
        if os.path.isdir(path):
            print("input path contains a dir !!!")
            return
        if not is_input_preprocessed:
            org_h, org_w, dst_h, dst_w, data = image_loader(
                path, algo_name, image_type)
            image_name = paths[i].encode('utf-8')
        else:
            res = str.split(paths[i], '_')
            data = np.fromfile(path, dtype=np.float32)
            # {name}_{org_h}_{org_w}_{dst_h}_{dst_w}.bin
            image_name = '_'.join(res[:-4]).encode('utf-8')
            org_h = int(res[-4])
            org_w = int(res[-3])
            dst_h = int(res[-2])
            dst_w = int(res[-1].split('.')[0])
        start_time = int(round(time.time() * 1000))
        zmq_sender.send_image_msg(data, image_name, image_type, org_h, org_w,
                                  dst_h, dst_w)
        # take a fresh timestamp so the elapsed send time is measured
        end_time = int(round(time.time() * 1000))
        current_count = current_count + 1
        print(
            current_count, 'image name is: %s' % image_name.decode('utf-8'),
            ' send image data message done, take %d ms' %
            (end_time - start_time))
    zmq_sender.over()


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument(
        '--algo-name',
        type=str,
        required=True,
        help='model type, such as mobilenetv1, mobilenetv2, '
        'yolov2, yolov3')
    parser.add_argument(
        '--input-file-path',
        type=str,
        required=True,
        help='directory containing the input files')
    parser.add_argument(
        '--is-input-preprocessed',
        type=ast.literal_eval,
        default=True,
        help='whether the input images are already preprocessed. If so, '
        'each file must be named {name}_{org_h}_{org_w}_{dst_h}_{dst_w}.bin')
    parser.add_argument(
        '--image-count',
        type=int,
        required=False,
        help='image count you want to eval')
    parser.add_argument(
        '--ip', type=str, required=True, help='ip of development board')
    parser.add_argument(
        '--image-type', type=int, required=False, help='image type')
    args = parser.parse_args()
    print(args)
    # TODO random seed
    send_images(args.ip, args.algo_name, args.input_file_path,
                args.is_input_preprocessed, args.image_count, args.image_type)
```
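In the preprocessed branch, send_images() parses the metadata from the file name and reads the payload with `np.fromfile(path, dtype=np.float32)`, whereas image_loader produces a uint8 payload. The sketch below writes and parses files in the `{name}_{org_h}_{org_w}_{dst_h}_{dst_w}.bin` convention so the dtype written and the dtype read can be checked against each other. The helper names and the example name are hypothetical, and the post does not say which branch was used for the accuracy run.

```python
import os
import tempfile

import numpy as np


def make_bin_name(name, org_h, org_w, dst_h, dst_w):
    """Build a name following {name}_{org_h}_{org_w}_{dst_h}_{dst_w}.bin."""
    return "%s_%d_%d_%d_%d.bin" % (name, org_h, org_w, dst_h, dst_w)


def parse_bin_name(filename):
    """Parse a name the same way the preprocessed branch of send_images() does."""
    res = filename.split('_')
    name = '_'.join(res[:-4])  # the name itself may contain underscores
    return (name, int(res[-4]), int(res[-3]), int(res[-2]),
            int(res[-1].split('.')[0]))


def save_payload(out_dir, name, payload, org_h, org_w, dst_h, dst_w):
    """Write a payload with tofile(); the raw bytes keep payload's dtype."""
    path = os.path.join(out_dir, make_bin_name(name, org_h, org_w, dst_h, dst_w))
    payload.tofile(path)
    return path


if __name__ == "__main__":
    payload = (np.arange(672 * 672 * 3) % 256).astype(np.uint8)
    with tempfile.TemporaryDirectory() as out_dir:
        path = save_payload(out_dir, "example_0001", payload, 512, 640, 672, 672)
        print(parse_bin_name(os.path.basename(path)))
        # reading uint8 bytes as float32 reinterprets them: 4 bytes per value
        print(payload.size, np.fromfile(path, dtype=np.float32).size)
```

If the .bin files hold uint8 data, reading them as float32 yields a quarter as many values with unrelated contents, so the writer and reader dtypes should match.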

network_data_iterator.cc:

```cpp
bool NetworkDataIterator::Next(ImageTensor *visible_image_tensor,
                               ImageTensor *lwir_image_tensor) {
  std::cout << "network -------------------------------" << std::endl;
  while (true) {
    // Two consecutive messages from the same receiver are treated as one
    // (visible, lwir) pair, so this relies on the sender alternating them.
    int visible_ret_code = network_receiver_->RecvImage(*visible_image_tensor);
    int lwir_ret_code = network_receiver_->RecvImage(*lwir_image_tensor);
    if (visible_ret_code == TIMEOUT || lwir_ret_code == TIMEOUT) {
      // If only one of the two receives timed out, the frame that did arrive
      // is overwritten on the next iteration and the pairing can shift.
      LOG(WARNING) << "Receive image timeout";
      continue;
    }
    if (visible_ret_code == FINISHED && lwir_ret_code == FINISHED) {
      is_finish_ = true;
    } else {
      visible_image_tensor->frame_id = NextFrameId();
      lwir_image_tensor->frame_id = visible_image_tensor->frame_id;
    }
    return !(visible_ret_code == FINISHED);
  }
}
```
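NetworkDataIterator::Next() calls RecvImage() twice on the same receiver and treats the two messages as one (visible, lwir) pair, so the pairing depends entirely on the order in which images are sent. The posted send_images() walks a single directory in os.listdir() order, which Python documents as arbitrary. Below is a minimal sketch of a deterministic send order. It assumes, hypothetically, that each visible image and its LWIR counterpart share the same file name in two separate directories; `interleave_pairs` and `list_send_order` are illustrative names, not part of the toolkit. Note also that over() sends a single FINISH_MSG while Next() checks both return codes for FINISHED; how RecvImage() behaves after the finish message is not shown in the post.

```python
import os


def interleave_pairs(visible_names, lwir_names):
    """Return the send order visible_0, lwir_0, visible_1, lwir_1, ...

    Assumes matching images share a file name. LWIR files without a visible
    counterpart are ignored; a visible file without an LWIR match is an error.
    """
    missing = sorted(set(visible_names) - set(lwir_names))
    if missing:
        raise ValueError("no lwir image for: " + ", ".join(missing))
    order = []
    for name in sorted(visible_names):  # sort: os.listdir() order is arbitrary
        order.append(("visible", name))
        order.append(("lwir", name))
    return order


def list_send_order(visible_dir, lwir_dir):
    """Full paths in the order NetworkDataIterator::Next() consumes them."""
    dirs = {"visible": visible_dir, "lwir": lwir_dir}
    pairs = interleave_pairs(os.listdir(visible_dir), os.listdir(lwir_dir))
    return [os.path.join(dirs[kind], name) for kind, name in pairs]
```

Each path in the returned list would then go through image_loader() and send_image_msg() in that order, so every visible frame is immediately followed by its own LWIR frame.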

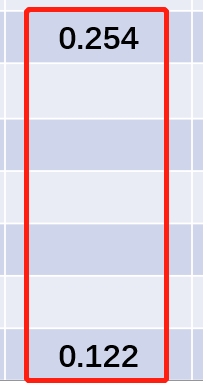

Accuracy loss:

Could you please take a look?