1. Chip model: X3 Pi

2. OpenExplorer (天工开物) development kit version: horizon_xj3_open_explorer_v2.4.2_20221227

3. Problem area: model conversion <-> on-board deployment

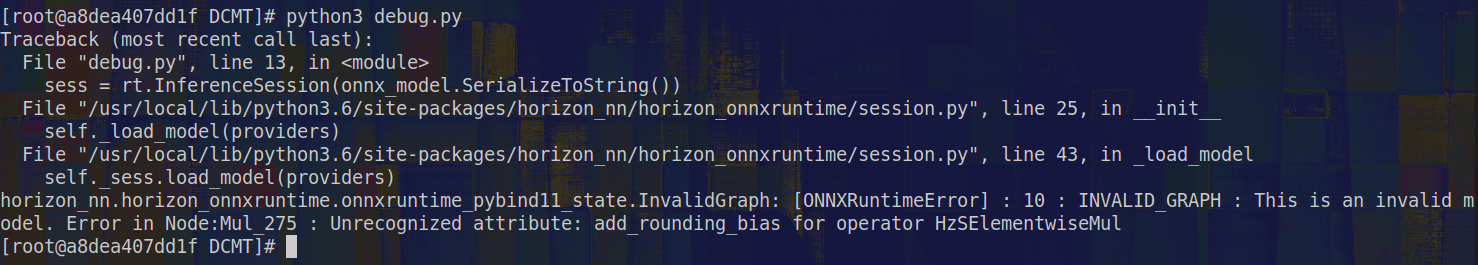

4. Problem description: the ONNXRuntimeError report is shown in the screenshot above.

The content of debug.py, which is mentioned in the screenshot, is as follows:

```python
from horizon_nn import horizon_onnx
import horizon_nn.horizon_onnxruntime as rt
import numpy as np

# reference: https://developer.horizon.ai/forumDetail/71036815603174578
if __name__ == '__main__':
    #1 *.onnx load
    # onnx_model = horizon_onnx.load("./DCMT_original_float_model.onnx")
    # onnx_model = horizon_onnx.load("./DCMT_optimized_float_model.onnx")
    onnx_model = horizon_onnx.load("./DCMT_quantized_model.onnx")
    sess = rt.InferenceSession(onnx_model.SerializeToString())
    input_names = [input.name for input in sess.get_inputs()]
    output_names = [output.name for output in sess.get_outputs()]
    print('input_names: ', input_names)
    print('output_names: ', output_names)

    #2 Input data
    # z = np.random.uniform(low=0.0, high=255.0, size=(1, 3, 127, 127)).astype(np.float32)  # DCMT_original_float_model.onnx
    # x = np.random.uniform(low=0.0, high=255.0, size=(1, 3, 255, 255)).astype(np.float32)  # DCMT_original_float_model.onnx
    # z = np.random.uniform(low=0.0, high=255.0, size=(1, 3, 127, 127)).astype(np.float32)  # DCMT_optimized_float_model.onnx
    # x = np.random.uniform(low=0.0, high=255.0, size=(1, 3, 255, 255)).astype(np.float32)  # DCMT_optimized_float_model.onnx
    z = np.random.uniform(low=0.0, high=255.0, size=(1, 127, 127, 3)).astype(np.uint8)  # DCMT_quantized_model.onnx
    x = np.random.uniform(low=0.0, high=255.0, size=(1, 255, 255, 3)).astype(np.uint8)  # DCMT_quantized_model.onnx
    bbox_t = np.asarray([30, 40, 100, 120]).astype(np.float32)
    b = np.expand_dims(np.expand_dims(np.expand_dims(bbox_t, axis=-1), axis=-1), axis=0)  # (1x4x1x1)
    # feed_dict = {input_names[0]: z, input_names[1]: x, input_names[2]: b}  # DCMT_original_float_model.onnx
    # feed_dict = {input_names[0]: x, input_names[1]: z, input_names[2]: b}  # DCMT_optimized_float_model.onnx
    feed_dict = {input_names[0]: x, input_names[1]: z, input_names[2]: b}  # DCMT_quantized_model.onnx

    #3 Run model
    result = sess.run(output_names, feed_dict)
    print(result[0].shape)
    print(result[1].shape)
    print(result[1][0, :, 0, 0])
```
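Since debug.py assigns inputs by position (`input_names[0]`, `input_names[1]`, ...) and the input order, layout, and dtype differ between the float and quantized models, it may help to compare the feed against what the session declares before calling `sess.run`. The following is only a minimal sketch and not a diagnosis of this error. It assumes that the objects returned by `sess.get_inputs()` expose `name`, `shape`, and `type` attributes with type strings such as `tensor(uint8)`, as in standard ONNX Runtime; I have not confirmed this for `horizon_onnxruntime`. `check_feed` is a hypothetical helper, and the stand-in names and shapes in the demo are made up.

```python
from types import SimpleNamespace

# Map ONNX Runtime type strings to NumPy dtype names (common cases only).
_ORT_TO_NUMPY = {
    "tensor(float)": "float32",
    "tensor(double)": "float64",
    "tensor(uint8)": "uint8",
    "tensor(int8)": "int8",
    "tensor(int32)": "int32",
    "tensor(int64)": "int64",
}


def check_feed(input_specs, feed_dict):
    """Return a list of mismatches between declared inputs and the feed."""
    problems = []
    for spec in input_specs:
        if spec.name not in feed_dict:
            problems.append(f"{spec.name}: missing from feed_dict")
            continue
        arr = feed_dict[spec.name]
        expected, actual = list(spec.shape), list(arr.shape)
        # Non-integer entries (None or a symbolic name) mark dynamic dimensions.
        shape_ok = len(expected) == len(actual) and all(
            not isinstance(e, int) or e == a for e, a in zip(expected, actual)
        )
        if not shape_ok:
            problems.append(f"{spec.name}: expected shape {expected}, got {actual}")
        # Unknown type strings fall through unchanged and are reported as-is.
        expected_dtype = _ORT_TO_NUMPY.get(spec.type, spec.type)
        if str(arr.dtype) != expected_dtype:
            problems.append(
                f"{spec.name}: expected dtype {expected_dtype}, got {arr.dtype}"
            )
    return problems


if __name__ == "__main__":
    # Stand-ins so the sketch runs without the toolchain (hypothetical values).
    specs = [
        SimpleNamespace(name="search", shape=[1, 255, 255, 3], type="tensor(uint8)"),
        SimpleNamespace(name="template", shape=[1, 127, 127, 3], type="tensor(uint8)"),
    ]
    feed = {
        "search": SimpleNamespace(shape=(1, 127, 127, 3), dtype="uint8"),
        "template": SimpleNamespace(shape=(1, 127, 127, 3), dtype="float32"),
    }
    for line in check_feed(specs, feed):
        print(line)
```

In debug.py this would be called as `check_feed(sess.get_inputs(), feed_dict)` just before `sess.run(output_names, feed_dict)`, if the attribute assumption above holds.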

DCMT_quantized_model.onnx can be downloaded from the link below:

Link: Baidu Netdisk (extraction code: 436i)