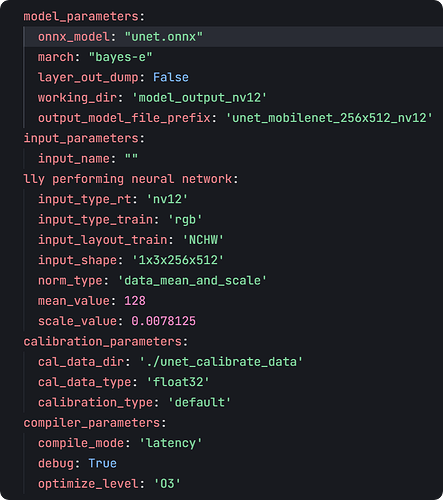

The ONNX model information is as follows:

INPUTS: tensor: float32[1,3,256,512]

OUTPUTS: tensor: float32[1,6,256,512]
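For reference, an output of shape [1,6,256,512] would normally be read as 6 per-class score maps, with the class mask obtained by taking the per-pixel argmax over the channel axis. The following is a minimal pure-Python sketch of that decoding, assuming an NCHW tensor flattened in row-major order with N = 1. The function name `argmax_mask` is hypothetical and is not part of any Horizon or TROS API.

```python
def argmax_mask(scores, num_classes, height, width):
    """Return a flat list of per-pixel class ids from flat NCHW scores (N = 1)."""
    plane = height * width
    if len(scores) != num_classes * plane:
        raise ValueError("scores length does not match num_classes * height * width")
    mask = []
    for p in range(plane):
        best = 0
        # Compare the score of every class at pixel p and keep the largest.
        for c in range(1, num_classes):
            if scores[c * plane + p] > scores[best * plane + p]:
                best = c
        mask.append(best)
    return mask


# Tiny illustrative input: 3 classes on a 1x2 image (made-up scores).
demo = [0.1, 0.9,    # class 0 scores for pixels 0 and 1
        0.7, 0.2,    # class 1
        0.3, 0.95]   # class 2
print(argmax_mask(demo, num_classes=3, height=1, width=2))  # [1, 2]
```

For the model above, the call would use `num_classes=6, height=256, width=512` on the flattened float32 output.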

The quantization parameters are as follows:


After conversion, I ran the following command on the RDK X5 board: ros2 launch dnn_node_example dnn_node_example_feedback.launch.py dnn_example_config_file:=config/unetconfig.json dnn_example_image:=config/raw_unet.jpg

The output is as follows:

[INFO] [example-1]: process has finished cleanly [pid 6602]

sunrise@ubuntu:~/model_bin/unet$ ros2 launch dnn_node_example dnn_node_example_feedback.launch.py dnn_example_config_file:=config/unetconfig.json dnn_example_image:=config/raw_unet.jpg

[INFO] [launch]: All log files can be found below /home/sunrise/.ros/log/2025-06-13-15-16-16-843301-ubuntu-7069

[INFO] [launch]: Default logging verbosity is set to INFO

dnn_node_example_path is /opt/tros/humble/lib/dnn_node_example

cp_cmd is cp -r /opt/tros/humble/lib/dnn_node_example/config .

[INFO] [example-1]: process started with pid [7072]

[example-1] [WARN] [1749798977.532958855] [dnn_example_node]: Parameter:

[example-1] feed_type(0:local, 1:sub): 0

[example-1] image: config/raw_unet.jpg

[example-1] image_type: 0

[example-1] dump_render_img: 1

[example-1] is_shared_mem_sub: 0

[example-1] config_file: config/unetconfig.json

[example-1] msg_pub_topic_name: hobot_dnn_detection

[example-1] info_msg_pub_topic_name: hobot_dnn_detection_info

[example-1] ros_img_topic_name: /image

[example-1] sharedmem_img_topic_name: /hbmem_img

[example-1] [WARN] [1749798977.533690771] [dnn_example_node]: Load [25] class types from file [config/unet.list]

[example-1] [WARN] [1749798977.533852979] [dnn_example_node]: Parameter:

[example-1] model_file_name: /home/sunrise/model_bin/unet/unet_mobilenet_256x512_nv12.bin

[example-1] model_name:

[example-1] [INFO] [1749798977.533950979] [dnn]: Node init.

[example-1] [INFO] [1749798977.533981396] [dnn_example_node]: Set node para.

[example-1] [WARN] [1749798977.534026812] [dnn_example_node]: model_file_name_: /home/sunrise/model_bin/unet/unet_mobilenet_256x512_nv12.bin, task_num: 4

[example-1] [INFO] [1749798977.534079395] [dnn]: Model init.

[example-1] [BPU_PLAT]BPU Platform Version(1.3.6)!

[example-1] [HBRT] set log level as 0. version = 3.15.54.0

[example-1] [DNN] Runtime version = 1.23.10_(3.15.54 HBRT)

[example-1] [A][DNN][packed_model.cpp:247][Model](2025-06-13,15:16:17.622.925) [HorizonRT] The model builder version = 1.24.3

[example-1] [INFO] [1749798977.730564112] [dnn]: The model input 0 width is 512 and height is 256

[example-1] [INFO] [1749798977.730735028] [dnn]:

[example-1] Model Info:

[example-1] name: unet_mobilenet_256x512_nv12.

[example-1] [input]

[example-1] - (0) Layout: NCHW, Shape: [1, 3, 256, 512], Type: HB_DNN_IMG_TYPE_NV12.

[example-1] [output]

[example-1] - (0) Layout: NCHW, Shape: [1, 6, 256, 512], Type: HB_DNN_TENSOR_TYPE_F32.

[example-1]

[example-1] [INFO] [1749798977.730796237] [dnn]: Task init.

[example-1] [INFO] [1749798977.732509526] [dnn]: Set task_num [4]

[example-1] [WARN] [1749798977.732605984] [dnn_example_node]: Get model name: unet_mobilenet_256x512_nv12 from load model.

[example-1] [INFO] [1749798977.732734692] [dnn_example_node]: The model input width is 512 and height is 256

[example-1] [WARN] [1749798977.732802067] [dnn_example_node]: Create ai msg publisher with topic_name: hobot_dnn_detection

[example-1] [INFO] [1749798977.745738510] [dnn_example_node]: Dnn node feed with local image: config/raw_unet.jpg

[example-1] [INFO] [1749798977.875056059] [dnn_example_node]: Output from frame_id: feedback, stamp: 0.0

[example-1] [INFO] [1749798977.919217004] [PostProcessBase]: out box size: 0

[example-1] [INFO] [1749798977.919309754] [ClassificationPostProcess]: out cls size: 0

[example-1] [INFO] [1749798977.919335296] [SegmentationPostProcess]: features size: 131072, width: 512, height: 256, num_classes: 19, step: 1

[example-1] [INFO] [1749798977.920040628] [ImageUtils]: target size: 1

[example-1] [INFO] [1749798977.920094087] [ImageUtils]: target type: parking_space, rois.size: 0

[example-1] [WARN] [1749798977.924550123] [ImageUtils]: Draw result to file: render_feedback_0_0.jpeg

The rendered output image shows no segmentation result.
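Two numbers in the log may be worth cross-checking. The model output is reported as [1, 6, 256, 512], i.e. 6 channels, while SegmentationPostProcess reports num_classes: 19 and features size: 131072, which equals a single 256 × 512 plane. The short sketch below only reproduces that arithmetic with values copied from the log. Reading the mismatch as a sign that the post-processing is configured for a different output format is an assumption, not something the log confirms.

```python
# Values copied from the log output.
output_shape = (1, 6, 256, 512)   # model output, NCHW, float32
reported_features = 131072        # "features size" from SegmentationPostProcess
reported_num_classes = 19         # "num_classes" from SegmentationPostProcess

n, c, h, w = output_shape
plane = h * w                     # elements in one H x W plane
total = n * c * h * w             # elements in the full output tensor

print("one plane:", plane)
print("full tensor:", total)
print("features size equals one plane:", plane == reported_features)
print("channels equal num_classes:", c == reported_num_classes)
```

If the two checks disagree in this way on the board as well, comparing the parser and class settings in config/unetconfig.json against the model's actual 6-channel float output would be a reasonable next step.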